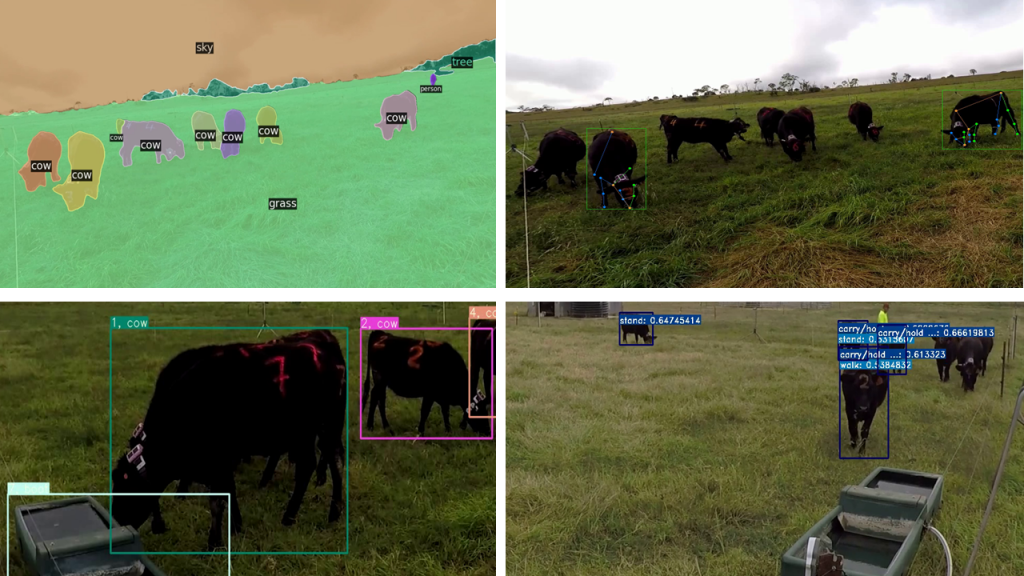

Livestock Behavior Recognition:

Livestock-derived foods play an important role in reducing stunting, malnutrition (including poor growth), and micronutrient deficiencies. They are particularly well suited to young children, who are in stages of rapid growth and development, and to pregnant and lactating women, who need high micronutrient intake from small volumes of food.

To meet these demands, animal products need to be of good quality, sufficiently available, and relatively affordable to the general public, without compromising animal welfare.

Understanding livestock behavior could help us better manage stress, detect disease early, and inform breeding selection, increasing the productivity and quality of animal-derived products.

This project uses computer vision and machine learning approaches to detect every instance of a given animal in the scene and then, using a variety of characteristics, classify the behavior of each animal over time.
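One common way to turn noisy per-frame predictions into a behavior over time is a sliding-window majority vote over the labels of a single tracked animal. The sketch below illustrates that idea only; it is an assumption, not the project's actual method, and the function name `smooth_labels`, the window size, and the behavior labels are hypothetical.

```python
from collections import Counter


def smooth_labels(frame_labels, window=5):
    """Replace each per-frame label with the most common label in a centered window."""
    half = window // 2
    smoothed = []
    for i in range(len(frame_labels)):
        # Window is clipped at the start and end of the sequence.
        neighborhood = frame_labels[max(0, i - half): i + half + 1]
        smoothed.append(Counter(neighborhood).most_common(1)[0][0])
    return smoothed


# Hypothetical per-frame predictions for one tracked animal.
raw = ["grazing", "grazing", "walking", "grazing", "grazing",
       "lying", "lying", "lying"]
smoothed = smooth_labels(raw, window=3)
print(smoothed)
```

Here the isolated "walking" frame is absorbed into the surrounding "grazing" segment, while the sustained change to "lying" is kept.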

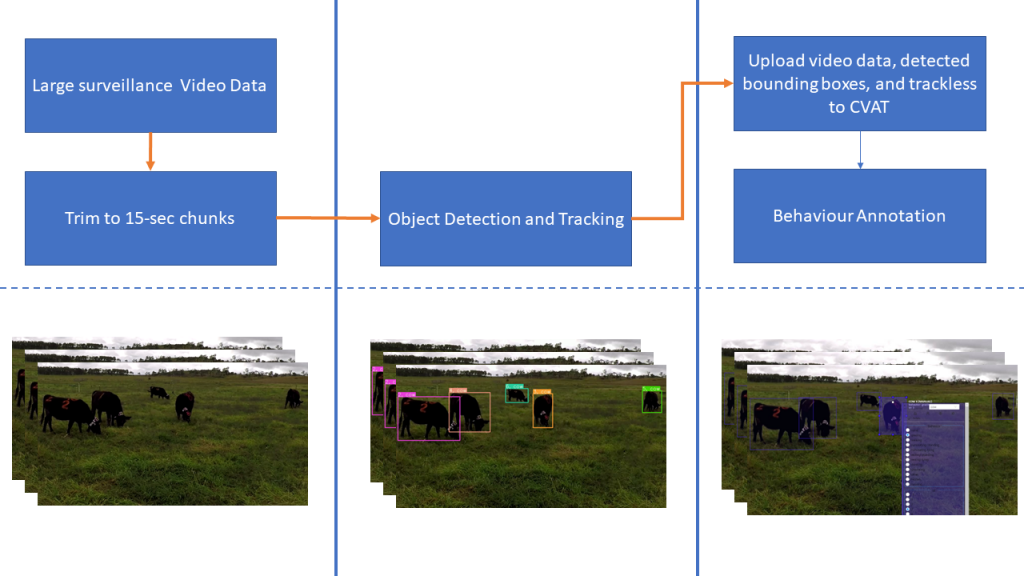

Automatic Livestock Behavior Annotation:

Annotating livestock video frame by frame is a cumbersome job. An app based on CVAT was developed to help annotate animal behavior much faster: annotators only need to annotate when the behavior changes.
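The idea of annotating only at behavior changes can be sketched as follows: each annotation marks the frame where a new behavior starts, and every later frame inherits that label until the next change. This is a minimal illustration under assumed data shapes; `expand_annotations` and the `(start_frame, label)` tuples are hypothetical and are not part of CVAT or of the app itself.

```python
from bisect import bisect_right


def expand_annotations(change_points, num_frames):
    """Expand sparse (start_frame, label) change points into one label per frame.

    Frames before the first change point get None.
    """
    points = sorted(change_points)
    starts = [frame for frame, _ in points]
    labels = []
    for frame in range(num_frames):
        # Index of the last change point at or before this frame.
        idx = bisect_right(starts, frame) - 1
        labels.append(points[idx][1] if idx >= 0 else None)
    return labels


# Hypothetical annotations: three clicks cover ten frames.
changes = [(0, "standing"), (4, "grazing"), (7, "lying")]
per_frame = expand_annotations(changes, 10)
print(per_frame)
```

In this example three annotations produce labels for all ten frames, which is where the time saving over frame-by-frame annotation comes from.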

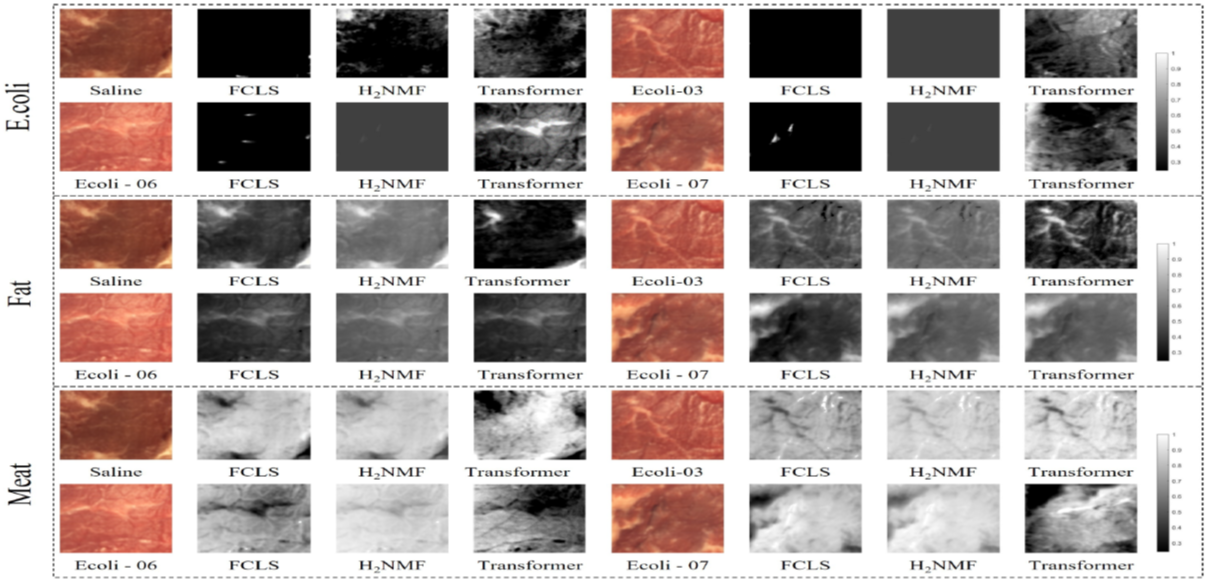

3D Hyperspectral Analysis of Meat to Identify Meat Contamination:

The “3D Hyperspectral Analysis of Meat to Identify Meat Contamination” project, led by Dr. Ali Zia’s team, applies hyperspectral imaging to food safety and quality in the meat industry. The team has established a benchmark for hyperspectral unmixing aimed at detecting Escherichia coli (E. coli) in steak images. Using a hyperspectral dataset curated specifically for unmixing faint signals, the project assesses a range of algorithms, from classical methods to deep learning models such as transformers and convolutional neural networks (CNNs). By drawing on both the visible and near-infrared spectra, the approach identifies subtle spectral differences that indicate contamination and are undetectable by the human eye. The analysis, performed across diverse E. coli concentrations and noise levels, offers a thorough evaluation of the techniques and shows the Transformer architecture to be especially promising in complex detection scenarios. Such advances support rapid, non-invasive contamination detection, which is crucial for protecting public health and sustaining consumer confidence in meat products.
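For readers unfamiliar with hyperspectral unmixing, the simplest classical formulation is the linear mixing model, in which each pixel spectrum is treated as a weighted blend of pure "endmember" spectra. The sketch below solves the two-endmember case in closed form with the fraction constrained to [0, 1]. It only illustrates the concept and is not one of the benchmarked algorithms; the function name and the four-band spectra are made up for the example.

```python
def unmix_two_endmembers(pixel, clean, contaminant):
    """Estimate the contaminant fraction a in [0, 1] for one pixel spectrum.

    Assumes the linear mixing model:
        pixel ~= a * contaminant + (1 - a) * clean
    and returns the least-squares value of a, clipped to [0, 1].
    """
    diff = [c - m for c, m in zip(contaminant, clean)]
    denom = sum(d * d for d in diff)
    if denom == 0:
        raise ValueError("endmember spectra must differ")
    numer = sum((p - m) * d for p, m, d in zip(pixel, clean, diff))
    return min(1.0, max(0.0, numer / denom))


# Made-up 4-band reflectance spectra (illustrative values only).
meat = [0.30, 0.45, 0.60, 0.70]
ecoli = [0.20, 0.50, 0.40, 0.90]

# Synthetic pixel containing a faint 10% contaminant signal.
mixed = [0.9 * m + 0.1 * e for m, e in zip(meat, ecoli)]
fraction = unmix_two_endmembers(mixed, meat, ecoli)
print(round(fraction, 3))
```

Real data adds noise and more than two endmembers, which is one reason a benchmark across noise levels and concentrations is useful.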

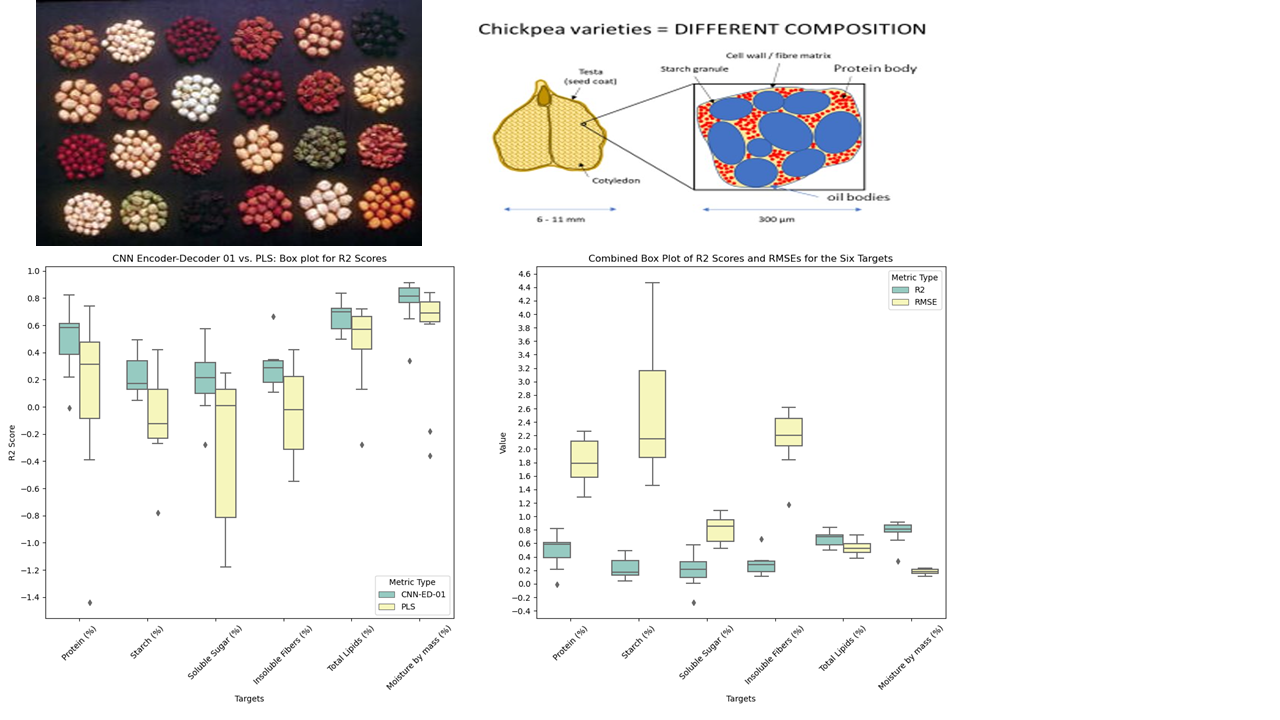

Chickpea Spectral Profiling:

The Chickpea Variety Profiling using Hyperspectral Data project, conducted by Dr. Ali Zia and his team, delves into the spectral analysis of different chickpea varieties. By harnessing hyperspectral imaging, the project captures detailed spectral information beyond the visible spectrum. This data, comprising numerous bands, is analyzed using various deep learning techniques to classify chickpea varieties based on their protein content. The ability to profile chickpeas at such a granular level could significantly advance agricultural practices and crop selection, leading to enhanced food quality and production efficiency.

Beneficial Insect Spreading:

The Beneficial Insect Spreading project employs advanced detection algorithms (YOLOv5 and DETR) to accurately count insects and larvae from mixtures dispersed by unmanned aerial vehicles (UAVs). This innovative approach harnesses the power of machine learning and computer vision to analyze aerial footage or images captured during the dispersal process. By distinguishing between different types of beneficial insects and their larvae within the mix, the project aims to optimize the distribution of these beneficial organisms across agricultural fields. This targeted deployment helps in natural pest control, enhancing crop health and yield while reducing the reliance on chemical pesticides. The technology’s precision and efficiency make it a valuable tool in modern, sustainable agriculture practices, promoting ecological balance and supporting farmers in achieving more productive and environmentally friendly farming methods.
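Once a detector has produced labeled boxes, the counting step reduces to tallying detections per class above a confidence threshold. The sketch below assumes a generic detection format (a list of dictionaries with `label`, `confidence`, and `box` keys); that format, the threshold, and the class names are hypothetical and do not correspond to the exact output of YOLOv5 or DETR.

```python
from collections import Counter


def count_detections(detections, min_confidence=0.5):
    """Count detections per class label, ignoring low-confidence boxes."""
    return Counter(
        det["label"] for det in detections
        if det["confidence"] >= min_confidence
    )


# Hypothetical detector output for one image; boxes are (x1, y1, x2, y2).
detections = [
    {"label": "insect", "confidence": 0.91, "box": (12, 30, 40, 58)},
    {"label": "larva", "confidence": 0.77, "box": (80, 22, 95, 41)},
    {"label": "insect", "confidence": 0.64, "box": (150, 90, 181, 120)},
    {"label": "larva", "confidence": 0.32, "box": (60, 60, 70, 72)},
]
counts = count_detections(detections)
print(dict(counts))
```

The threshold trades missed organisms against false counts, so in practice it would be tuned against manually counted samples.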

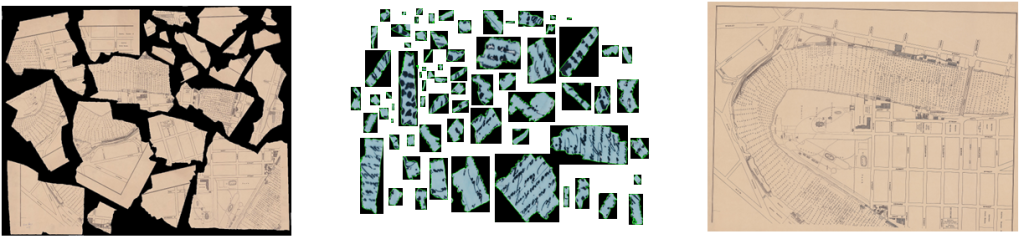

Document Reconstruction:

The Archive Document Reconstruction project uses computer vision and machine learning to restore and digitize historical documents. The project aims to detect and correct physical damage such as tears, creases, and faded text, ensuring that the valuable content is not only preserved but also made more accessible. Machine learning models, trained on vast datasets of archival materials, can reconstruct missing content while maintaining the document’s original style and integrity. Together, these technologies safeguard cultural heritage and enable researchers and historians to explore and interact with the past in unprecedented detail, transforming fragile paper relics into resilient digital records.

Determining Mosaic Resilience in Sugarcane Plants using Hyperspectral Images:

This study uses hyperspectral imaging to identify and classify the resistance of sugarcane varieties to mosaic virus disease. Leveraging the near-infrared spectrum, the project aims to pinpoint resistant strains efficiently, a task that is challenging because visible symptoms are subtle and vary across plants. The research applies deep learning techniques, including convolutional neural networks such as ResNet, to analyze the rich spectral data captured by hyperspectral imaging. This approach has already shown promising results in distinguishing resistant sugarcane varieties, providing a much-needed tool for farmers to preemptively manage and curb the spread of this disease, which could lead to improved yield and sustainability in the sugarcane industry.

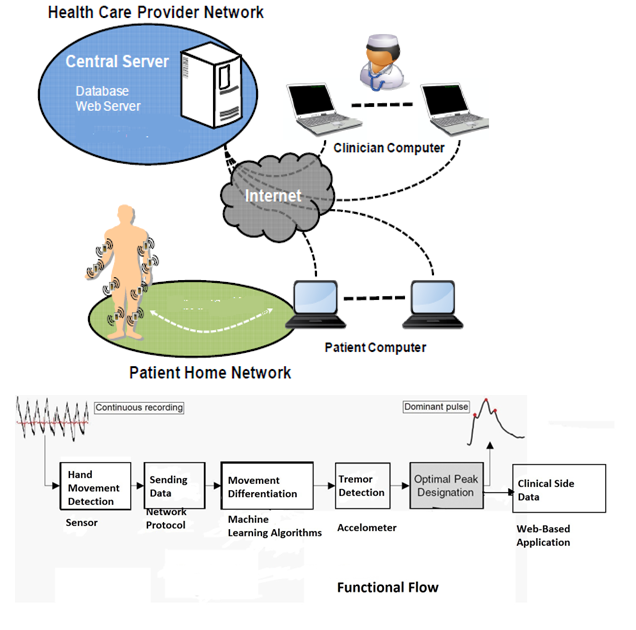

Parkinson’s Patient Monitoring System:

The “Remote Parkinson Patient Behavioral Analysis” project, spearheaded by Dr. Ali Zia at the COMSATS Institute of Information Technology, Pakistan, developed and deployed a system for remote monitoring and analysis of Parkinson’s disease (PD) patients. The project used wireless inertial measurement units and camera technology to distinguish normal movements from Parkinsonian tremors. Algorithms interpreted the sensor data and classified movements based on the Unified Parkinson’s Disease Rating Scale (UPDRS), facilitating accurate remote patient assessments. The initiative has advanced PD patient care in Pakistan by enabling real-time monitoring and immediate response to abnormal movement patterns, showcasing the potential of integrating Artificial Intelligence with sensor and wireless technologies to improve the quality of life for those living with Parkinson’s disease.

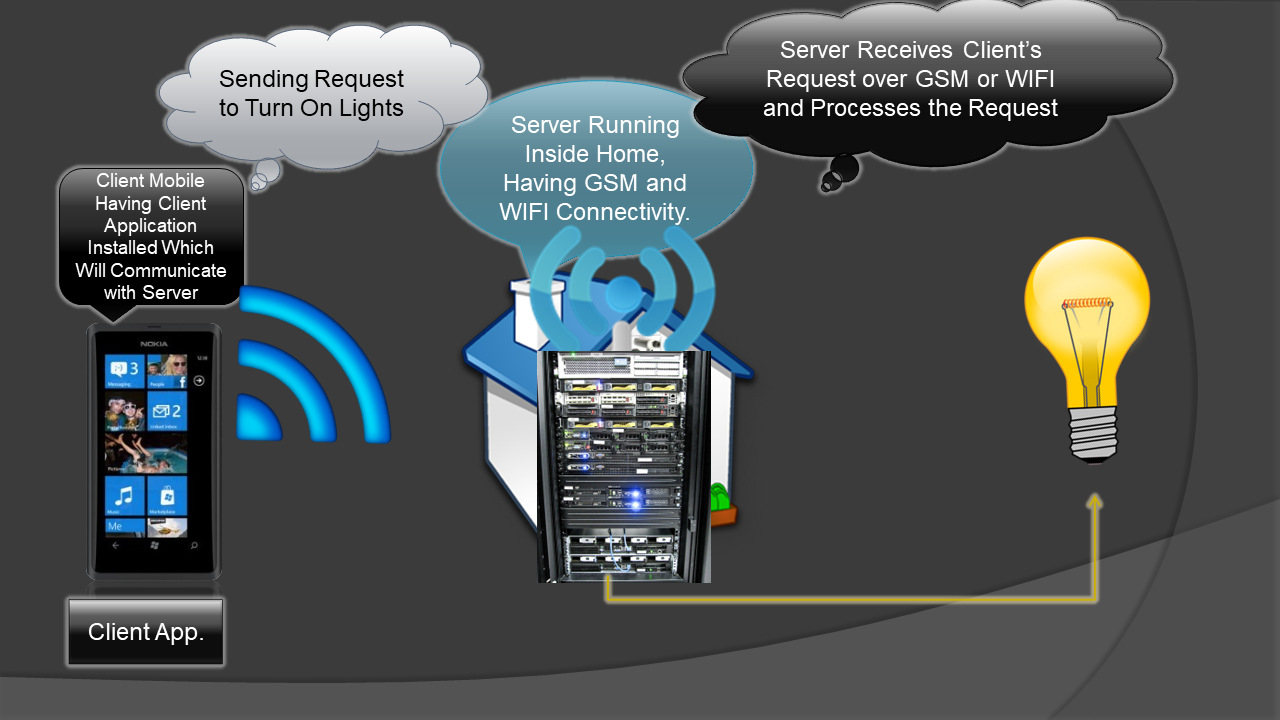

Smart Home Automation and Personalization:

Under the leadership of Dr. Ali Zia, the “Smart Home Automation and Personalization” project successfully integrated advanced technology to create an intelligent home environment. This project encompassed a wide array of functionalities including face detection, face recognition, image processing, and security automation via both Wi-Fi and web services. The system also featured an alarm system, number plate detection, smoke detection, and the integration of IP cameras for enhanced security and monitoring. Utilizing timers and multithreading for efficient operation, the project ensured that motion detection and video streaming capabilities were optimized for both web and mobile platforms. This comprehensive approach not only elevated home security but also provided a customizable and interactive experience for residents, showcasing Dr. Zia’s commitment to leveraging Artificial Intelligence and machine learning in practical, everyday applications.

Other Notable Projects:

- Interactive human facial emotion recognition system.

- Robot path identification and map construction (awarded best project, 2011).

- Computer control using finger movement.

- Interactive human emotion recognition through voice and facial expressions.

- Content-based video retrieval using object classification.

- Smart traffic surveillance and vehicular identification system.

- Autonomous mobile robot (car) path identification and obstacle avoidance.

- Camera surveillance using mobile phones.

- 3D area reconstruction using autonomous mobile robots.